✍ Topic: Generative Adversarial Network (GAN)

👉 Learn before: Analogy of Generative Adversarial Network (GAN)


👉Part : 02

Introduction

GANs are an active research topic in deep learning. Their popularity has grown because they can learn generative models of data distributions that are typically hard to model. The architecture has several advantages: it can generalize from limited data, create new scenes from small datasets, and make simulated data look more realistic.

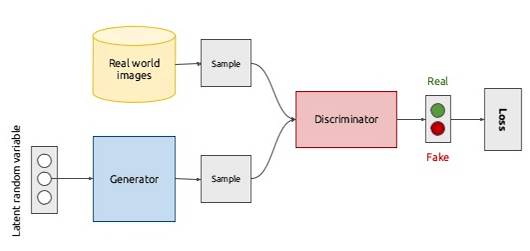

The name GAN combines three words: Generative, Adversarial, and Network. Generative means the model learns to generate a probability distribution that is close to the distribution of the original data we want to approximate. Adversarial means conflict or opposition. Network refers to the two neural networks involved, which we call the generator and the discriminator. These two networks compete against each other in order to learn the probability distribution, and this competition is the reason for the word adversarial.

Two models in GAN

👉 A. Generator model:

A generator network learns from existing data to generate new data. It can, for example, learn from existing images to generate new images. The generator's primary goal is to generate data (such as images, video, audio, or text) from a vector of random numbers sampled from a latent space. When creating a generator network, we need to specify the goal of the network. This might be image generation, text generation, audio generation, video generation, and so on.
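The idea of mapping a random latent vector to a data sample can be sketched in a few lines. This is only a toy illustration, not a real image generator: the single tanh layer, the sizes `latent_dim` and `data_dim`, and the random untrained weights are all assumptions made for the example.

```python
import math
import random

def sample_latent(dim, rng):
    """Draw a latent vector z with independent standard normal entries."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def generator(z, weights, biases):
    """Toy one-layer generator: x_j = tanh(sum_i W[j][i] * z[i] + b[j])."""
    return [
        math.tanh(sum(w * zi for w, zi in zip(row, z)) + b)
        for row, b in zip(weights, biases)
    ]

rng = random.Random(0)
latent_dim, data_dim = 4, 6  # hypothetical sizes chosen for illustration
# Untrained random weights; a real generator would learn these.
weights = [[rng.uniform(-1, 1) for _ in range(latent_dim)] for _ in range(data_dim)]
biases = [0.0] * data_dim

z = sample_latent(latent_dim, rng)
fake_sample = generator(z, weights, biases)
print(len(z), len(fake_sample))
```

Each call with a fresh `z` gives a different `fake_sample`, which is how one generator can produce many different outputs.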

👉 B. Discriminative model:

The discriminator network tries to differentiate between the real data and the data generated by the generator network. The discriminator network tries to put the incoming data into predefined categories. It can either perform multiclass classification or binary classification. Generally, in GANs binary classification is performed.
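Binary classification by the discriminator can be sketched as a function that returns the probability that its input is real. The logistic-regression form, the weights, and the 0.5 threshold below are assumptions for illustration only. A real discriminator is usually a deeper network.

```python
import math

def sigmoid(s):
    """Squash a real-valued score into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-s))

def discriminator(x, weights, bias):
    """Toy logistic-regression discriminator: returns P(x is real)."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(score)

weights = [0.5, -0.25, 1.0]  # hypothetical, untrained weights
bias = 0.1
p_real = discriminator([1.0, 2.0, 0.5], weights, bias)
# Binary decision: call the sample real when P(real) is at least 0.5.
label = "real" if p_real >= 0.5 else "fake"
print(round(p_real, 3), label)
```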

The generator is a neural network G(z, Ɵ1). Its role is to map the input noise variable z to the desired data space x (say, a real image). The discriminator is the second neural network D(x, Ɵ2), which outputs a probability in the range (0, 1) that the data come from the real dataset. In both cases, Ɵi represents the weights of each neural network.

The discriminator is trained to correctly classify the input data as either real or fake. Its weights are updated to maximize the probability that any real sample x is classified as real while minimizing the probability that any fake sample is classified as real. In more technical terms, its loss/error function is used to maximize D(x) and minimize D(G(z)). The generator is trained to fool the discriminator by generating data that is as realistic as possible. The generator's weights are optimized to maximize the probability that any fake sample is classified as real. This means that its loss/error function is used to maximize D(G(z)).
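The two goals above can be written as losses to minimize. The sketch below assumes the common log-loss form: the discriminator minimizes the negative of log D(x) + log(1 - D(G(z))), and the generator minimizes -log D(G(z)). The batch values are made up for illustration.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Negative of log D(x) + log(1 - D(G(z))), averaged over a batch."""
    terms = [math.log(r) + math.log(1.0 - f) for r, f in zip(d_real, d_fake)]
    return -sum(terms) / len(terms)

def generator_loss(d_fake):
    """Negative of log D(G(z)), averaged over a batch."""
    return -sum(math.log(f) for f in d_fake) / len(d_fake)

# Hypothetical discriminator outputs for 3 real and 3 fake samples.
d_real = [0.9, 0.8, 0.95]   # D(x): high values mean "real" is recognized
d_fake = [0.1, 0.2, 0.05]   # D(G(z)): low values mean fakes are caught

print(discriminator_loss(d_real, d_fake))  # small: discriminator is doing well
print(generator_loss(d_fake))              # large: generator is being caught
```

When the discriminator is confident and correct, its loss is small and the generator's loss is large. The situation reverses as the generator learns to fool it.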

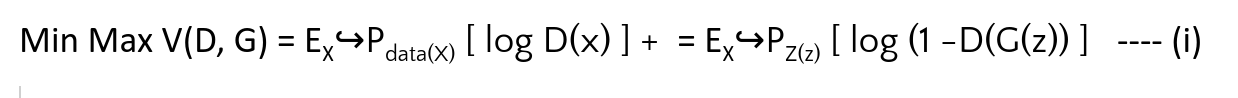

👉 Objective Function

Here is the objective function of a GAN:
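Assuming equation (i) is the standard minimax value function of the original GAN formulation, it can be written as follows. The notation p_data and p(z) is chosen to match the symbols used in this section.

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \big[ \log D(x) \big]
  + \mathbb{E}_{z \sim p(z)} \big[ \log \big( 1 - D(G(z)) \big) \big]
  \tag{i}
```

The discriminator D tries to make this value as large as possible, while the generator G tries to make it as small as possible.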

In equation (i):

- Pdata(x) ➨ The distribution of real data

- x ➨ A sample from Pdata

- P(z) ➨ The distribution of the input noise z fed to the generator

- G(z) ➨ Generator network

- D(x) ➨ Discriminator network

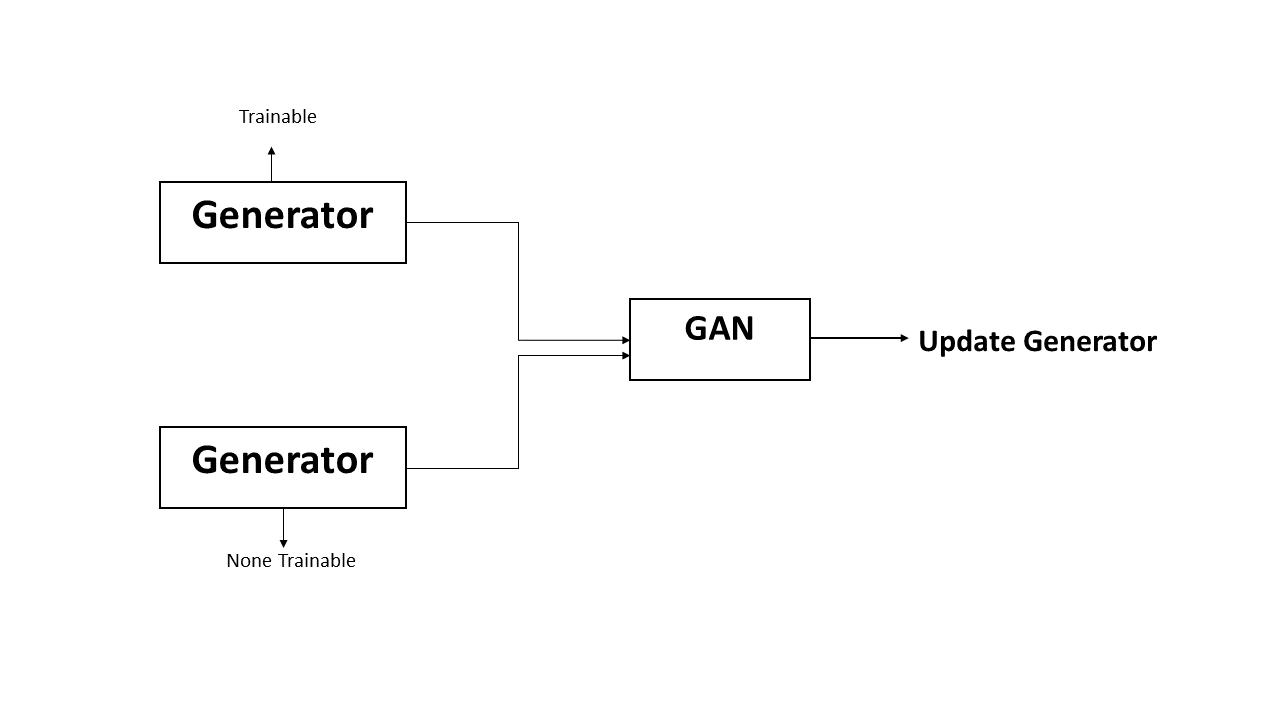

👉 Adversarial Training

The adversarial network takes both the discriminator and the generator model as input. It combines these two models into a single model that can be trained on latent examples (noise).

Note: We set the discriminator's trainable flag to false and the generator's to true, meaning that while the combined model is training, the discriminator cannot update its weights. In contrast, the generator updates its weights by training. The generator keeps getting better and better while the discriminator remains unchanged.
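The effect of freezing the discriminator can be sketched with a tiny one-dimensional example in plain Python. Everything here is an assumption for illustration: the generator is G(z) = a * z + b, the discriminator is D(x) = sigmoid(w * x + c), and the generator loss is -log D(G(z)). Only `gen` is updated, and `disc` is never touched.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

# Frozen discriminator D(x) = sigmoid(w * x + c): never updated below.
disc = {"w": 1.0, "c": 0.0}
# Trainable generator G(z) = a * z + b.
gen = {"a": 1.0, "b": 0.0}

def generator_loss(gen, disc, zs):
    """Mean of -log D(G(z)) over the batch."""
    total = 0.0
    for z in zs:
        x = gen["a"] * z + gen["b"]
        total += -math.log(sigmoid(disc["w"] * x + disc["c"]))
    return total / len(zs)

def generator_step(gen, disc, zs, lr=0.1):
    """One gradient-descent step on the generator parameters only."""
    grad_a = grad_b = 0.0
    for z in zs:
        x = gen["a"] * z + gen["b"]
        d = sigmoid(disc["w"] * x + disc["c"])
        dloss_dx = -(1.0 - d) * disc["w"]  # derivative of -log D(x) w.r.t. x
        grad_a += dloss_dx * z
        grad_b += dloss_dx
    gen["a"] -= lr * grad_a / len(zs)
    gen["b"] -= lr * grad_b / len(zs)

rng = random.Random(0)
zs = [rng.gauss(0.0, 1.0) for _ in range(64)]  # fixed batch of noise
disc_before = dict(disc)
loss_before = generator_loss(gen, disc, zs)
for _ in range(50):
    generator_step(gen, disc, zs)
loss_after = generator_loss(gen, disc, zs)
print(loss_before, loss_after)
```

The generator loss goes down while the discriminator parameters stay exactly as they were, which is the behaviour described in the note.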

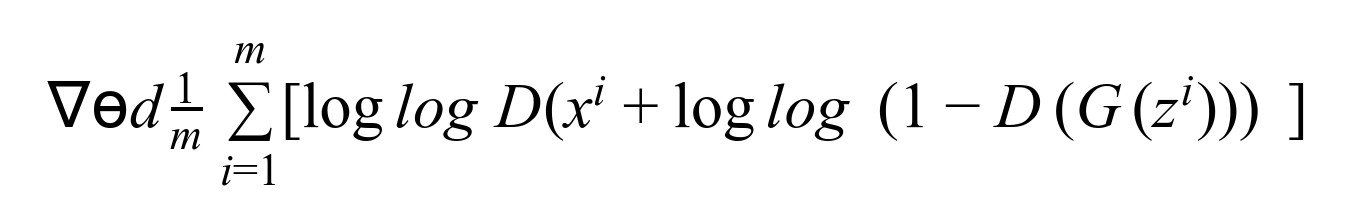

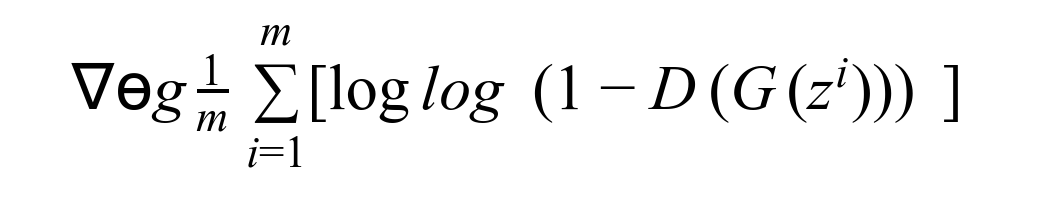

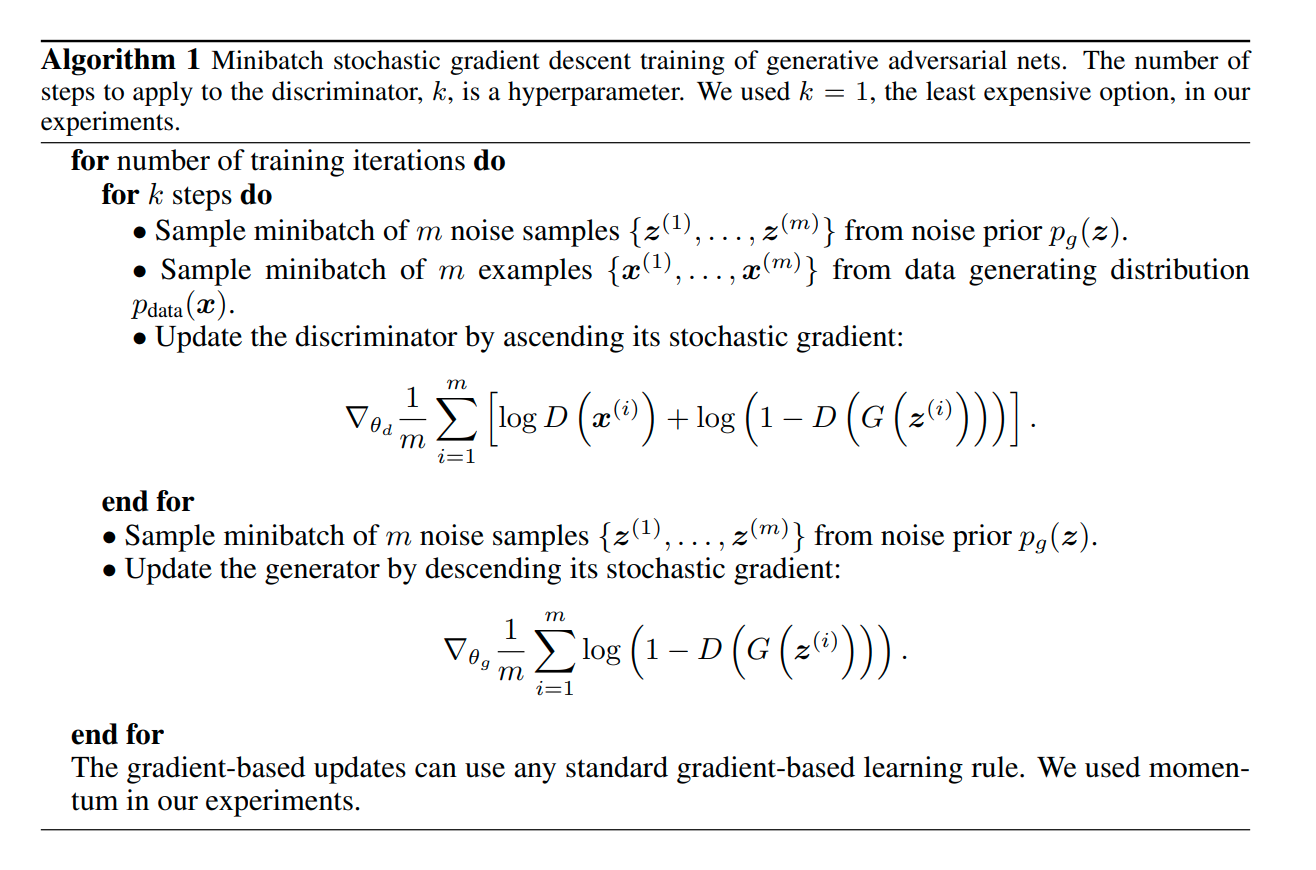

👉 Training Algorithm
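A minimal sketch of the usual alternating scheme, assuming the discriminator is updated first (`d_steps` times) and the generator once per iteration. The toy setup is hypothetical: real data is drawn from a normal distribution with mean 4, the generator is G(z) = a * z + b, and the discriminator is D(x) = sigmoid(w * x + c). It is meant to show the order of the updates, not to guarantee convergence.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def train_toy_gan(steps=100, batch=32, lr=0.05, d_steps=1, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator G(z) = a * z + b
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c)
    history = []
    for _ in range(steps):
        # 1) Discriminator update(s): ascend log D(x) + log(1 - D(G(z))).
        for _ in range(d_steps):
            reals = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # hypothetical real data
            fakes = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
            grad_w = grad_c = 0.0
            for x in reals:
                d = sigmoid(w * x + c)
                grad_w += (1.0 - d) * x   # d/dw of log D(x)
                grad_c += (1.0 - d)
            for g in fakes:
                d = sigmoid(w * g + c)
                grad_w -= d * g           # d/dw of log(1 - D(g))
                grad_c -= d
            w += lr * grad_w / batch
            c += lr * grad_c / batch
        # 2) Generator update: descend -log D(G(z)) with D held fixed.
        zs = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        grad_a = grad_b = 0.0
        for z in zs:
            d = sigmoid(w * (a * z + b) + c)
            dloss_dx = -(1.0 - d) * w
            grad_a += dloss_dx * z
            grad_b += dloss_dx
        a -= lr * grad_a / batch
        b -= lr * grad_b / batch
        history.append((a, b, w, c))
    return history

history = train_toy_gan()
a, b, w, c = history[-1]
print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

Plotting `b` from `history` over the iterations is a simple way to watch how the generated samples move relative to the real data.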

👉 Application of GAN

GANs are applied in the following areas:

- Generate Examples for Image Datasets

- Semantic-Image-to-Photo Translation

- Generate Photographs of Human Faces

- Generate Realistic Photographs

- Face Frontal View Generation

- Generate Cartoon Characters

- Image-to-Image Translation

- Text-to-Image Translation

- Generate New Human Poses

- 3D Object Generation

- Clothing Translation

- Photograph Editing

- Video Prediction

- Photos to Emojis

- Photo Inpainting

- Super Resolution

- Photo Blending

- Face Aging

👉 Problem of GAN

GANs have a number of common failure modes. All of these common problems are areas of active research. While none of these problems have been completely solved, we'll mention some things that people have tried.

Non-convergence: The model parameters oscillate, destabilize, and never converge. Gradient descent may fail to converge on the cost function of a minimax game.

Mode collapse: The generator collapses and produces only a limited variety of samples.

Diminished gradient: The discriminator becomes so successful that the generator's gradient vanishes and the generator learns nothing.

- Imbalance between the generator and the discriminator, causing overfitting

- High sensitivity to hyperparameter selection

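The diminished-gradient problem can be seen numerically. The sketch below assumes the discriminator ends in a sigmoid, so D = sigmoid(s) for a score s, and compares the gradient of two generator losses with respect to s: log(1 - D), the term from the minimax objective, and -log D, a commonly used alternative. The function names are made up for this example.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def grad_saturating(s):
    """d/ds of log(1 - sigmoid(s)), the generator term in the minimax loss."""
    return -sigmoid(s)

def grad_non_saturating(s):
    """d/ds of -log(sigmoid(s)), the alternative generator loss."""
    return -(1.0 - sigmoid(s))

# s is the discriminator's pre-sigmoid score for a fake sample.
# A very negative s means the discriminator confidently says "fake".
for s in (0.0, -5.0, -10.0):
    print(s, grad_saturating(s), grad_non_saturating(s))
```

As the discriminator becomes more confident (s more negative), the first gradient shrinks toward zero, so the generator receives almost no learning signal, while the second stays close to -1.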