👉Part : 01

✍ Topic: Generative Adversarial Network (GAN)

Analogy

The easiest way to understand what GANs are is through a simple analogy:

Suppose there is a shop that buys certain kinds of wine from customers and later resells them.

However, there are nefarious customers who sell fake wine in order to get money. In this case, the shop owner has to be able to distinguish between the fake and authentic wines.

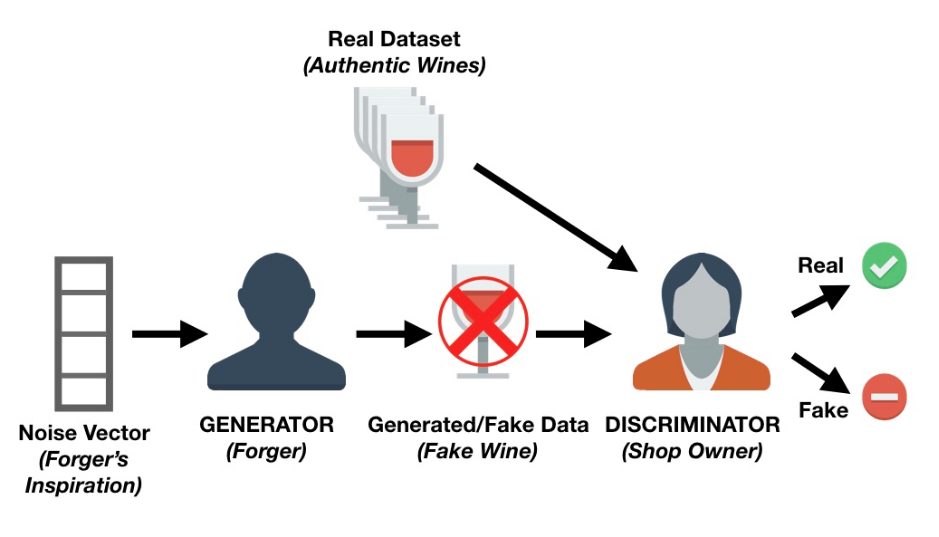

You can imagine that initially, the forger might make a lot of mistakes when trying to sell the fake wine and it will be easy for the shop owner to identify that the wine is not authentic. Because of these failures, the forger will keep on trying different techniques to simulate the authentic wines and some will eventually be successful. Now that the forger knows that certain techniques got past the shop owner's checks, he can start to further improve the fake wines based on those techniques.

At the same time, the shop owner would probably get some feedback from other shop owners or wine experts that some of the wines that she has are not original. This means that the shop owner would have to improve how she determines whether a wine is fake or authentic. The goal of the forger is to create wines that are indistinguishable from the authentic ones, and the goal of the shop owner is to accurately tell if a wine is real or not.

👉 Components of a Generative Adversarial Network

Using the example above, we can come up with the architecture of a GAN.

There are two major components within GANs: the generator and the discriminator. The shop owner in the example corresponds to the discriminator network. It is usually a convolutional neural network (since GANs are mainly used for image tasks) that assigns a probability that an image is real.

The forger corresponds to the generative network. It is also typically a convolutional neural network, built with transposed convolution (often called "deconvolution") layers. This network takes a random noise vector and outputs an image. During training, the generator learns which areas of the image to improve or change so that the discriminator has a harder time telling its generated images apart from the real ones.

The generative network keeps producing images that are closer in appearance to the real images while the discriminative network is trying to determine the differences between real and fake images. The ultimate goal is to have a generative network that can produce images which are indistinguishable from the real ones.
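To make the two roles concrete, here is a minimal sketch in plain Python. It assumes a toy one-dimensional setting instead of images. The hypothetical `generator` is a linear map from a noise value to a fake sample. The hypothetical `discriminator` is a single logistic unit that returns the probability that its input is real. Real GANs use (transposed) convolutional networks, and all names and parameter values here are illustrative only.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function: squashes a score into (0, 1)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator(z: float, a: float, b: float) -> float:
    """Hypothetical toy generator: turns a noise value z into a fake sample a*z + b."""
    return a * z + b


def discriminator(x: float, w: float, c: float) -> float:
    """Hypothetical toy discriminator: probability that sample x is real."""
    return sigmoid(w * x + c)


rng = random.Random(0)
z = rng.gauss(0.0, 1.0)                     # the noise "vector" (a single number here)
fake = generator(z, a=1.0, b=0.0)           # the forgery
p_real = discriminator(fake, w=0.5, c=0.0)  # the shop owner's verdict on it
print(f"D(G(z)) = {p_real:.3f}")
```

In a real GAN both functions are deep networks with many parameters, but the interface is the same: noise in, sample out for G, and sample in, probability out for D.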

Another Analogy

You can think of a GAN as the opposition of a counterfeiter and a cop in a game of cat and mouse, where the counterfeiter is learning to pass false notes, and the cop is learning to detect them. Both are dynamic; i.e. the cop is in training, too (to extend the analogy, maybe the central bank is flagging bills that slipped through), and each side comes to learn the other’s methods in a constant escalation.

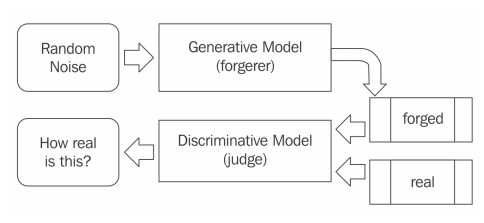

The key intuition behind GANs is analogous to art forgery, the process of creating works of art (https://en.wikipedia.org/wiki/Art) that are falsely credited to other, usually more famous, artists. GANs train two neural nets simultaneously, as shown in the diagram. The generator G(Z) makes the forgery. The discriminator D(Y) judges how realistic the reproductions are, based on its observations of authentic artworks and copies. D(Y) takes an input Y (for instance, an image) and outputs a score for how real the input is. By the usual convention, a value close to one denotes real and a value close to zero denotes forgery. G(Z) takes random noise Z as input and is trained to fool D into judging whatever G(Z) produces as real.

The goal of training the discriminator D(Y) is therefore to maximize D(Y) for every image from the true data distribution and to minimize D(Y) for every image not from the true data distribution. G and D thus play opposite games, hence the name adversarial training. Note that G and D are trained in an alternating manner, and each of their objectives is expressed as a loss function optimized via gradient descent. The generative model learns to forge more successfully, and the discriminative model learns to recognize forgeries more successfully. The discriminator network (usually a standard convolutional neural network) tries to classify whether an input image is real or generated. The important new idea is to backpropagate through both the discriminator and the generator to adjust the generator's parameters. This way, the generator learns to fool the discriminator in an increasing number of situations. Ideally, the generator eventually learns to produce forged images that are indistinguishable from real ones.
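The opposite objectives described above are commonly written as a single minimax value function, the formulation introduced in the original GAN paper (Goodfellow et al., 2014). Here it is in this post's notation, assuming the following: D(Y) is the probability that Y is real, p_data is the distribution of real images, and p_Z is the noise distribution. D tries to maximize V by pushing D(Y) up on real images and D(G(Z)) down on forgeries, while G tries to minimize V.

```latex
\min_{G}\max_{D} V(D, G) =
  \mathbb{E}_{Y \sim p_{\text{data}}}\left[\log D(Y)\right]
  + \mathbb{E}_{Z \sim p_{Z}}\left[\log\left(1 - D(G(Z))\right)\right]
```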

Of course, GANs require finding the equilibrium in a game with two players. For effective learning it is required that if a player successfully moves downhill in a round of updates, the same update must move the other player downhill too. Think about it! If the forger learns how to fool the judge on every occasion, then the forger himself has nothing more to learn. Sometimes the two players eventually reach an equilibrium, but this is not always guaranteed and the two players can continue playing for a long time.
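The alternating rounds of updates described above can be sketched in plain Python. This is a toy, hypothetical setup, not the post's method:

- one-dimensional real data drawn from N(4, 1)
- a linear generator G(z) = a*z + b
- a logistic discriminator D(x) = sigmoid(w*x + c)
- hand-derived gradients
- the common "non-saturating" generator loss -log D(G(z)) instead of log(1 - D(G(z)))

Each round first updates D, then G. Inspecting `history` shows whether the two losses settle or keep moving.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def softplus(t: float) -> float:
    """Stable log(1 + exp(t)). Note: -log D = softplus(-s) and -log(1 - D) = softplus(s)."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


def train_toy_gan(steps=1000, batch=64, lr=0.05, seed=0):
    """Alternating GAN updates on a hypothetical 1-D toy problem.

    Real data: Y ~ N(4, 1). Generator: G(z) = a*z + b. Discriminator: D(x) = sigmoid(w*x + c).
    Returns the final parameters and a list of (discriminator loss, generator loss) per round.
    """
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator parameters
    w, c = 0.1, 0.0  # discriminator parameters
    history = []
    for _ in range(steps):
        # Discriminator step: push D(y) toward 1 on real data and D(G(z)) toward 0 on fakes.
        gw = gc = loss_d = 0.0
        for _ in range(batch):
            y = rng.gauss(4.0, 1.0)
            x = a * rng.gauss(0.0, 1.0) + b
            s_r, s_f = w * y + c, w * x + c
            loss_d += softplus(-s_r) + softplus(s_f)  # -log D(y) - log(1 - D(x))
            gw += (sigmoid(s_r) - 1.0) * y + sigmoid(s_f) * x
            gc += (sigmoid(s_r) - 1.0) + sigmoid(s_f)
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: backpropagate through D to push D(G(z)) toward 1.
        ga = gb = loss_g = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            s_f = w * (a * z + b) + c
            loss_g += softplus(-s_f)            # -log D(G(z))
            dloss_dx = (sigmoid(s_f) - 1.0) * w  # chain rule through the discriminator
            ga += dloss_dx * z
            gb += dloss_dx
        a -= lr * ga / batch
        b -= lr * gb / batch

        history.append((loss_d / batch, loss_g / batch))
    return (a, b, w, c), history


params, history = train_toy_gan()
print("generator (a, b):", round(params[0], 3), round(params[1], 3))
print("last (D loss, G loss):", history[-1])
```

Because this discriminator is linear in x, it can only react to where the samples sit, not to their spread. The toy is therefore useful for watching the alternating two-player dynamics rather than as a realistic model.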

👉 Learn more about GANs and their training algorithm in the references at the end of this post.

👉 Applications of GAN

GANs have been applied in the following areas:

- Generate Examples for Image Datasets

- Semantic-Image-to-Photo Translation

- Generate Photographs of Human Faces

- Generate Realistic Photographs

- Face Frontal View Generation

- Generate Cartoon Characters

- Image-to-Image Translation

- Text-to-Image Translation

- Generate New Human Poses

- 3D Object Generation

- Clothing Translation

- Photograph Editing

- Video Prediction

- Photos to Emojis

- Photo Inpainting

- Super Resolution

- Photo Blending

- Face Aging

👉 Problems of GAN

GANs have a number of common failure modes. All of these problems are areas of active research, and none of them has been completely solved.

Non-convergence: The model parameters oscillate, destabilize, and never converge. The generator and discriminator play a minimax game instead of jointly minimizing one cost function, so gradient descent is not guaranteed to converge.
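Non-convergence shows up even in the simplest two-player game. The sketch below is a toy illustration, not a GAN. Player x minimizes f(x, y) = x·y while player y maximizes it, and both take simultaneous gradient steps. The function name and step size are hypothetical choices. For this game, each update multiplies the distance from the equilibrium (0, 0) by sqrt(1 + lr^2), so the parameters spiral outward instead of converging.

```python
import math


def simultaneous_gda(x: float, y: float, lr: float, steps: int):
    """Gradient descent-ascent on the toy game f(x, y) = x * y.

    Player x minimizes f and player y maximizes f; the unique equilibrium is (0, 0).
    Returns the distance from the equilibrium before and after every step.
    """
    radii = [math.hypot(x, y)]
    for _ in range(steps):
        grad_x, grad_y = y, x                    # df/dx = y, df/dy = x
        x, y = x - lr * grad_x, y + lr * grad_y  # both players update at once
        radii.append(math.hypot(x, y))
    return radii


radii = simultaneous_gda(1.0, 1.0, lr=0.1, steps=50)
print(f"distance from equilibrium: start {radii[0]:.3f}, after 50 steps {radii[-1]:.3f}")
```

The growth follows from expanding (x - lr·y)^2 + (y + lr·x)^2 = (1 + lr^2)(x^2 + y^2). A smaller learning rate only slows the drift. It does not remove it.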

Mode collapse: The generator collapses onto a few kinds of output and produces samples of limited variety.
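One way to see mode collapse is to count how many modes of the real data the generated samples actually reach. The sketch below is a hypothetical diagnostic on one-dimensional data with three peaks. The `modes_covered` helper and its `max_dist` threshold are illustrative assumptions, and the two "generators" are simply hand-made sample lists.

```python
import random


def modes_covered(samples, modes, max_dist=1.0):
    """Count how many target modes have at least one sample within max_dist of them."""
    covered = set()
    for s in samples:
        nearest = min(modes, key=lambda m: abs(s - m))  # closest real-data peak
        if abs(s - nearest) <= max_dist:
            covered.add(nearest)
    return len(covered)


rng = random.Random(0)
modes = [-4.0, 0.0, 4.0]  # real data: a mixture of three peaks
diverse = [rng.gauss(m, 0.1) for m in modes for _ in range(100)]
collapsed = [rng.gauss(4.0, 0.1) for _ in range(300)]  # generator stuck on one peak

print("diverse generator covers", modes_covered(diverse, modes), "of", len(modes), "modes")
print("collapsed generator covers", modes_covered(collapsed, modes), "of", len(modes), "modes")
```

Both sample lists contain 300 realistic-looking points, but only the first one reflects the variety of the real data.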

Diminished gradient: The discriminator becomes so successful that the generator's gradient vanishes and the generator learns nothing.
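The vanishing-gradient effect can be checked with a few lines of arithmetic. Write the discriminator's output on a fake sample as D = sigmoid(s) for a logit s. The sketch below compares two generator losses: the original log(1 - D(G(Z))), and the "non-saturating" alternative -log D(G(Z)) suggested in the original GAN paper. The helper name is hypothetical. The derivatives in its docstring are the standard ones for the logistic function.

```python
import math


def sigmoid(s: float) -> float:
    """Numerically stable logistic function: D = sigmoid(s) for discriminator logit s."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator_grads(logit: float):
    """Gradient of each generator loss with respect to the logit on a fake sample.

    saturating loss      log(1 - sigmoid(s)):  d/ds = -sigmoid(s)
    non-saturating loss  -log(sigmoid(s)):     d/ds = sigmoid(s) - 1
    """
    d = sigmoid(logit)
    return -d, d - 1.0


# A confident discriminator gives fakes a very negative logit, so D(G(z)) is close to 0.
for logit in (0.0, -5.0, -10.0):
    sat, non_sat = generator_grads(logit)
    print(f"D(G(z)) = {sigmoid(logit):.5f}  saturating: {sat:.5f}  non-saturating: {non_sat:.5f}")
```

As D(G(z)) approaches 0, the saturating gradient shrinks toward 0 while the non-saturating one stays close to -1. This is why the latter is a common remedy when the discriminator wins too easily.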

- Imbalance between the generator and the discriminator, causing overfitting

- High sensitivity to the choice of hyperparameters

👉 References

- https://www.datacamp.com/community/tutorials/generative-adversarial-networks

- https://pathmind.com/wiki/generative-adversarial-network-gan